It's 8 AM. Your free sleep-cycle alarm app wakes you, and you check today's weather on your phone. By the time you've posted a beautiful sunrise picture with your morning cup of coffee on Instagram, your actions have been tracked, recorded, stored, and sold. But it's not just your data that has been recorded. Your nephew, colleague, aunt, and best friend have been recorded too. Practically everyone with a smartphone has been recorded (which is the whole of society, except for a clever few resistant hippies). Why have millions and millions of people let mass surveillance happen? (HINT: it has a lot to do with the dangerous thoughts below.)

In this article series, I want to uncover the psychological and cultural reasons behind the privacy problem of the internet:

- What thoughts and ideas have allowed tech companies to steal vast amounts of personal data?

- How did entire societies agree with global mass surveillance?

- Why are so many people OK with surveillance technology that sells their data to the highest bidder?

I'm curious how many times you'll nod your head while reading this article: "Yep, I thought that, and yep, that was dumb." I know I've been guilty of thinking quite a few of them.

Together we'll explore some history and scientific research to shine a light on the privacy paradox and learn important lessons about ourselves and society. Why? Because privacy is ultimately not a technology problem; it's a problem caused by human decisions. I want to understand why these decisions were made (without blaming anyone), so we can learn from them and correct our errors to protect everyone's fundamental right to privacy.

I believe the solution to a privacy-friendly internet is a step-by-step process:

- First, we need to understand ourselves, our mistakes in thinking, and our decisions.

- Then, we need to ask what we actually want to achieve and find better solutions for getting there.

Do we want an internet filled with ads fighting for our attention? Or do we want to create an internet where people can learn, connect, and solve fundamental problems freely?

Why are users willing to trade their privacy to use technology?

In this first article of this series, we'll look at the thoughts of internet users. Why do we want tools and technology so much that we're OK with trading our privacy to use them?

Below are the first five dangerously common thoughts (or brain farts) I've come across:

(There are probably many more. Let me know your ideas, and I'll write about them in later articles.)

Thought 1: "I'm OK to give away some privacy if it's for the greater good."

As technology becomes more and more powerful, it might feel less and less reasonable to expect privacy. The thought goes something like this: "If technology knows everything about me, and knows me better than I know myself, it can help me make better decisions." Yet this thought assumes that technology does what is best for you, or that technology is neutral. Fun fact: neither is true. Technology is owned by people and institutions with their own goals, and they have the power to shape it to suit those goals first.

Given what technology can do - and perhaps more importantly, what it will be able to do - it could seem as if wanting privacy would go against or slow down the improvement of technology.

But we must not forget one crucial truth here: People invented technology, and it should be there to serve people. When large institutions like corporations take hold of surveillance technology for their own goals, it might not always be for the "greater good," but it will first be for the good of that specific corporation.

Losing our privacy would do us a great disservice. For that reason, we cannot passively allow technological advancements to take it away from us in the name of "improving the quality of the world." In some ways, it might indeed contribute to the greater good, but we must ask ourselves: At what cost?

We shouldn't, under any circumstances, be forced into sacrificing our privacy, thinking, and decision-making for the betterment of technology.

Thought 2: "Computers are way smarter than me."

It is undeniable that computers today are capable of extraordinary things, and their processing power will likely keep increasing. They can solve problems and calculations in seconds that would take the human brain days, using a fraction of the energy we would need to accomplish the same. However...

...computers are not humans. They don't have emotions; they don't feel anything. They are unable to put what happens in the world into context. They can calculate risks, but they can't calculate the emotional weight of a loss or the thrill of a victory. Life, for them, is nothing more than numbers arranged in particular ways. They depend on numerical models rather than sensing the considerable chaos of reality as humans do. They cannot feel the difference between demolishing a kindergarten in the name of a good goal and stopping you from holding hands with the love of your life on a beautiful late night just to increase your average sleep hours.

These limitations often go unnoticed because human values come so naturally to us. Yet, just as humans make errors, machines have their own "machine errors": factors that can't be captured in numbers, and reactions or outcomes that are not easily translated into code. So although computers are highly effective at solving specific problems, we cannot and should not outsource every decision we can think of to a computer just to reduce our workload. Some decisions do require human input, and if machines are used (or allowed) to make them without "real-flesh" review, they could easily cause unprecedented problems as technology becomes more powerful.

(We're currently seeing this in the growing opposition and hatred between groups of people who would rather click on another headline they agree with than have an honest, good conversation with someone who holds different beliefs. This situation has been worsened, if not caused, by algorithms that optimize for the time users spend online.)

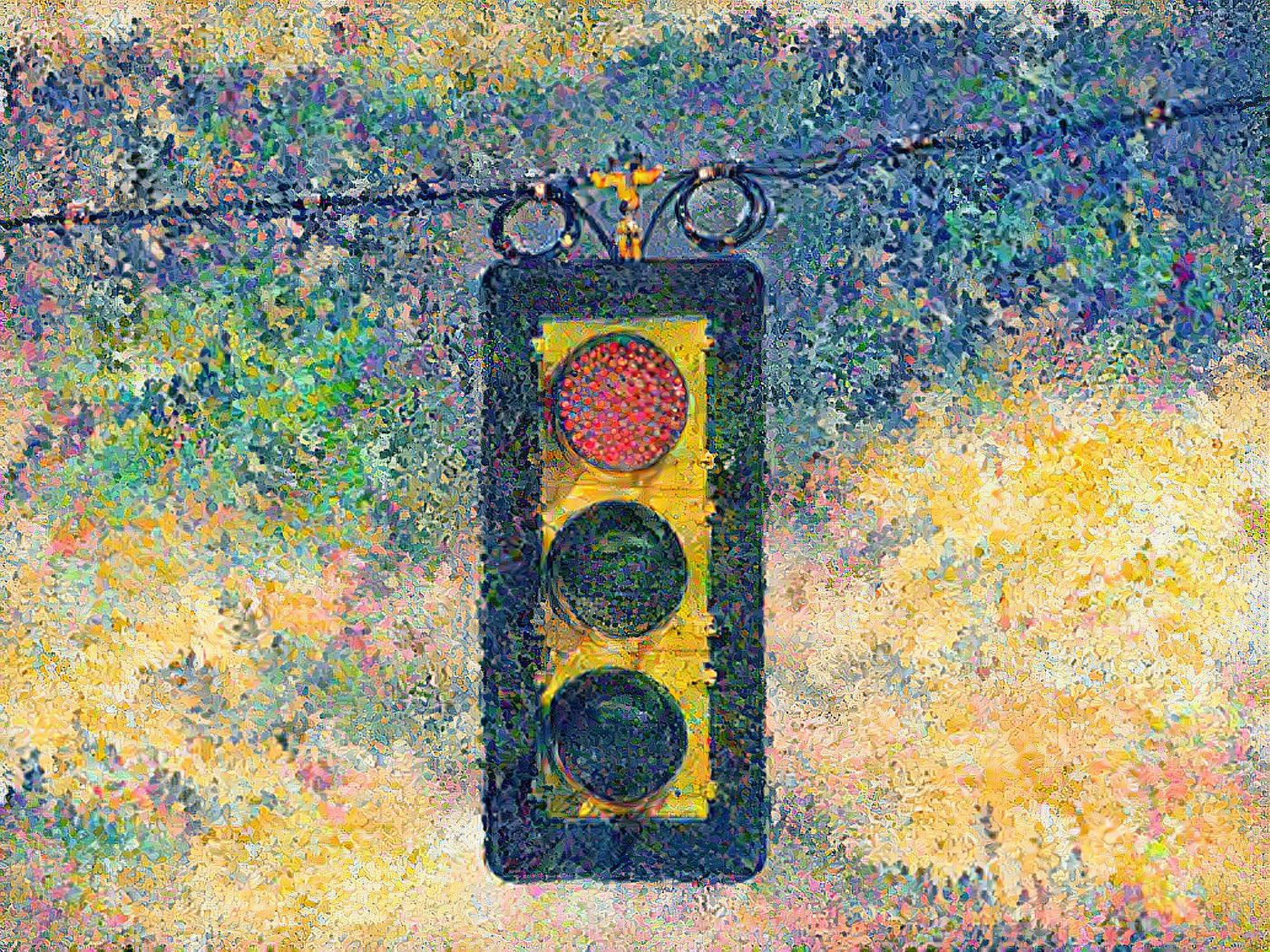

Thought 3: "These tools help us achieve our goals."

Albert Einstein once made a witty remark that perfectly describes this problem: "Perfection of means and confusion of goals seems to characterize our age."

When you're holding a hammer, everything looks like a nail. The same is true for technology and the tools it gives us. We see the world through the lens of the tools we have at hand, so we continuously look for ways to use them. Companies that have tools to track individuals are constantly looking for better ways to track them. For example, even as Google looks for alternatives to replace tracking cookies, it still wants to build profiles of its users. But why?

A healthier approach would be to ask "what do we want to accomplish?" instead of looking at our tools and asking how we can use them in our pursuits.

A great example is the installation of red-light cameras in a city. At first sight, it looks like a very reasonable step against drivers who don't take traffic rules seriously: they get punished financially. Over time, however, the city may start to rely on the income generated by these violations and become more interested in issuing fines than in stopping drivers from running red lights. The problem is not solved, but at least it's monetized. The focus could then shift towards making the cameras catch more violators, because that brings in more money, while the underlying problem remains unsolved.

This is why, before looking at the tools, we should first ask ourselves: what do we want to achieve? Only then can we consider the changes that bring us the results we want and are good for the world.

Thought 4: "Technology will always remain the solution rather than become the problem."

We may often think that whatever innovations are brought to the table, they can only do good in the world. After all, why would innovators develop something that's not good for our precious planet Earth? Certainly, most great things were developed with good intentions, but good intentions alone don't guarantee good outcomes. You can probably recall at least one occasion when you wholeheartedly wanted to do something good, but for some reason, it turned out terribly wrong. Innovators struggle with this too.

We now know that today's solutions often become (or contribute to) tomorrow's problems.

This happens because we firmly believe we can always control technology, while in reality it's often the reverse. We are not always aware of the long-term impact of our solutions, and even if they work exceptionally well at the time, they can turn into a massive problem in the future. This is why we should always ask: if we accept and use this "free" technology, where might it lead once we let it walk through our door?

Thought 5: "In the end, I decide."

Big tech firms try very hard to give us the feeling that we are in control because if we feel in control, we believe we can do anything we want.

While this is sometimes true, in reality our options and alternatives are usually less "freely chosen" than they first appear. For example, if you don't like how a particular service treats your data, you can always stop using it. That sounds simple, but we all know that reaching such a decision usually involves many other complexities and issues. So if you decide to keep using that service, it unfortunately means that you also keep consenting to the exploitation and mishandling of your data. It's simply unethical to offer such ultimatums.

This is why Simple Analytics will never give anyone such ultimatums. It's our business to protect privacy while delivering fast and accessible insights. We never, ever track any visitors, and we do not own your data. We have packages for every type of budget. You always own your data, and you can decide if you want to download it or delete it whenever you want. And yes, that comes with a monetary price. But we believe that's a price worth paying for preserving everyone's fundamental right to freedom and privacy.

What about you? Can you spot some of the reasons why you agreed to use technology even though you knew it could steal your privacy? Let us know!

This article was inspired by the work of Gary Marx, a fascinating researcher. A while ago, I came across an academic paper by this American sociologist and found his findings very relevant to the present day. I took the liberty of simplifying some of his thoughts and rewriting them in a more understandable, conversational tone. Can you spot these thinking fallacies around you? If you liked this article and you're interested in learning more about his work on mass surveillance, check out Gary's website: https://web.mit.edu/gtmarx/www/garyhome.html

Gary said it perfectly: "The conditions of modern life are often such that one can hardly avoid choosing actions that are subject to surveillance. While the surveillance may be justified on other grounds, it is disingenuous to call it a free and informed choice."

If you enjoyed reading this article and are interested in learning more about privacy, consider subscribing to the privacy newsletter.

Every month we give you short, sweet, and insightful privacy updates and help you stay in the know. Needless to say, our emails never track anything, ever. We want to spread the word and help more people become aware of the dangers of data exploitation. Also, we'll suggest ethical solutions or other privacy-focused apps. You can subscribe for free at theprivacynewsletter.com.